
Disallowed URLs can still collect links, says Google

If you are into SEO, you probably know the concept of disallowing URLs. You add a Disallow rule to your site's robots.txt file, and Google's crawler (Googlebot) will then not crawl the matching URLs. Note that this blocks crawling, not indexing: Google will not read the page's content, but the URL itself can still end up in search results.
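To see what a Disallow rule actually controls, here is a minimal sketch using Python's standard-library `urllib.robotparser`. It answers only one question: may this user agent crawl this URL? The robots.txt content, the `example.com` URLs and the helper name `is_crawl_allowed` are hypothetical. Python's parser is also not Google's own implementation, so edge cases such as wildcard patterns may be handled differently.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: every crawler is asked not to crawl /private/
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


def is_crawl_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if robots_txt permits user_agent to crawl url (hypothetical helper)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    for url in ("https://example.com/blog/post", "https://example.com/private/page"):
        allowed = is_crawl_allowed(ROBOTS_TXT, "Googlebot", url)
        print(url, "->", "crawl allowed" if allowed else "crawl blocked")
```

The second URL comes back as blocked. Nothing in this check decides whether a URL may appear in search results, because robots.txt only governs crawling.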

Site owners usually do this for URLs that are not important for search. (Protecting a site from harmful spam links is a separate task, handled through Google's disavow tool rather than robots.txt.) Because Google cannot crawl a disallowed URL, the common assumption has been that such a URL cannot "collect" links either, meaning it gains nothing from the links pointing to it. However, Google's John Mu has now offered a different explanation.

In a Twitter conversation with a user, John Mu said: "If a URL is disallowed for crawling in the robots.txt, it can still 'collect' links, since it can be shown in Search as well (without its content though)." This caused some confusion in SEO groups around the world, because most of us had assumed until now that disallowed URLs cannot collect links.

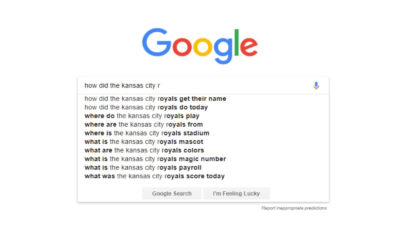

To understand this, consider Google's search results. You have probably seen results that show only a URL with the message "No information is available for this page." These are typically URLs that are disallowed in robots.txt.

You still see them because Google has found them relevant to that particular search. If one or more websites link to such a URL, Google treats it as a potentially important URL, whether or not it is disallowed. Google can index the URL from those links alone, even though it never crawls the page's content.

This means that links you build to a disallowed URL still count toward that URL. Their practical value is limited, however: because Google's crawler cannot see the page's content, Google can only go by information such as the URL itself and the anchor text of the links pointing to it.
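The mechanism described above can be illustrated with a toy crawler. This is a simplified sketch, not how Google actually crawls, indexes or ranks pages. The link graph, the URLs and the `toy_crawl` function are all hypothetical, and a single robots.txt is applied to every host for simplicity. The crawler skips any page that robots.txt disallows, so it never reads that page's content or outgoing links. It still records the links that other, crawlable pages point at it.

```python
from collections import Counter
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt, applied to every URL below for simplicity
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""

# Hypothetical link graph: page URL -> URLs that page links to
LINK_GRAPH = {
    "https://example.com/": [
        "https://example.com/blog/post",
        "https://example.com/private/page",
    ],
    "https://other-site.test/review": ["https://example.com/private/page"],
    "https://example.com/blog/post": ["https://example.com/"],
    # This page's own links are never read, because crawling it is blocked
    "https://example.com/private/page": ["https://example.com/"],
}


def toy_crawl(robots_txt, link_graph, user_agent="Googlebot"):
    """Count inbound links per URL, fetching only pages robots.txt allows."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    inbound = Counter()
    fetched = set()
    for page, outlinks in link_graph.items():
        if not parser.can_fetch(user_agent, page):
            continue  # blocked: content and outgoing links stay unseen
        fetched.add(page)
        for target in outlinks:
            inbound[target] += 1  # recorded even when the target is disallowed
    return inbound, fetched


if __name__ == "__main__":
    inbound, fetched = toy_crawl(ROBOTS_TXT, LINK_GRAPH)
    for url, count in inbound.items():
        note = "content crawled" if url in fetched else "no content (crawling blocked)"
        print(f"{url}: {count} inbound link(s), {note}")
```

Running it reports two inbound links for `https://example.com/private/page` even though its content was never fetched. That matches the situation John Mu describes: a disallowed URL can collect links and be listed without its content.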
