
Intel unveils its latest AI chip to power LLMs and compete with Nvidia, AMD

Large language models (LLMs) are extremely power-hungry and need powerful GPUs to run. That is one reason people worry about companies like OpenAI, which have been deploying GPUs to power chatbots such as ChatGPT while earning relatively little in return, although Microsoft's backing is a positive sign for OpenAI. Now Intel has unveiled Gaudi3, a new AI accelerator chip designed to power the next generation of AI models.

According to one report, “The most prominent AI models, like OpenAI’s ChatGPT, run on Nvidia GPUs in the cloud. It’s one reason Nvidia stock has been up nearly 230% year to date while Intel shares have risen 68%. And it’s why companies like AMD and, now Intel, have announced chips that they hope will attract AI companies away from Nvidia’s dominant position in the market.” The report adds that “Gaudi3 will compete with Nvidia’s H100, the main choice among companies that build huge farms of the chips to power AI applications, and AMD’s forthcoming MI300X, when it starts shipping to customers in 2024.”

At the launch event, Intel’s CEO said, “We’ve been seeing the excitement with generative AI, the star of the show for 2023,” adding, “We think the AI PC will be the star of the show for the upcoming year.” The CEO also said the company’s new Core Ultra processors will play a role. The Core Ultra chips won’t provide enough power to run a chatbot like ChatGPT without an internet connection, but they can handle smaller AI tasks; for example, Intel said Zoom runs its background-blurring feature on them. They are built on the company’s 7-nanometer process, making them more power-efficient than earlier chips.

The report also notes that “Intel’s fifth-generation Xeon processors power servers deployed by large organizations like cloud companies. Intel didn’t share pricing, but the previous Xeon cost thousands of dollars. Intel’s Xeon processors are often paired with Nvidia GPUs in the systems that are used for training and deploying generative AI. In some systems, eight GPUs are paired to one or two Xeon CPUs.”

-

Domains5 years ago

Domains5 years ago8 best domain flipping platforms

-

Business5 years ago

Business5 years ago8 Best Digital Marketing Books to Read in 2020

-

How To's6 years ago

How To's6 years agoHow to register for Amazon Affiliate program

-

How To's6 years ago

How To's6 years agoHow to submit your website’s sitemap to Google Search Console

-

Domains4 years ago

Domains4 years agoNew 18 end user domain name sales have taken place

-

Business5 years ago

Business5 years agoBest Work From Home Business Ideas

-

How To's5 years ago

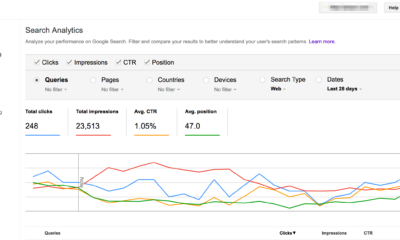

How To's5 years ago3 Best Strategies to Increase Your Profits With Google Ads

-

Domains4 years ago

Domains4 years agoCrypto companies continue their venture to buy domains