Google discloses that Googlebot crawls only the first 15 MB of a page

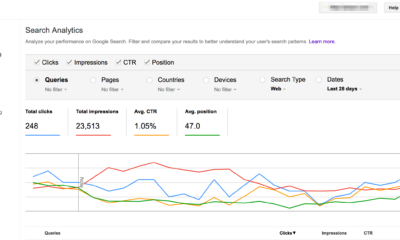

Google has disclosed that Googlebot crawls only the first 15 MB of a web page. The disclosure came in an update to its help document, and it surprised the SEO community. It means that Googlebot disregards any content in the file beyond that point.

Google stated in the help document, “Any resources referenced in the HTML such as images, videos, CSS and JavaScript are fetched separately. After the first 15 MB of the file, Googlebot stops crawling and only considers the first 15 MB of the file for indexing.” The document adds that the file size limit applies to the uncompressed data. John Mueller later explained on Twitter that the limit applies only to the HTML file itself.

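Because Googlebot considers only the first 15 MB for indexing, the important content needs to appear within that portion of the file. Pages should therefore be structured so that the content that matters for SEO comes early in the code. The limit applies to HTML and supported text-based files. It is also a reason to keep images and videos as separately referenced, compressed files rather than letting them inflate the HTML.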

To make sure that Googlebot crawls and recognizes HTML pages in full, a common recommendation is to keep them under 100 KB. Page size can be checked with tools like Google PageSpeed Insights. The page also needs meaningful on-page content, so practicing on-page SEO remains important for indexing.
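Since the limit applies to the uncompressed data, a page's size can also be checked directly. The following Python is a minimal sketch, not an official tool: the function name `check_html_size` and the constant `GOOGLEBOT_LIMIT_BYTES` are made up for illustration, and reading "15 MB" as 15 × 1024 × 1024 bytes is an assumption, since the help document does not spell out the unit.

```python
import gzip

# Assumption: "15 MB" is read as 15 * 1024 * 1024 bytes.
GOOGLEBOT_LIMIT_BYTES = 15 * 1024 * 1024


def check_html_size(raw_body: bytes, gzip_encoded: bool = False) -> dict:
    """Compare the uncompressed size of an HTML body with the limit.

    raw_body is the response body as bytes. Set gzip_encoded=True when
    the body is still gzip-compressed, so the uncompressed size is measured.
    """
    html = gzip.decompress(raw_body) if gzip_encoded else raw_body
    size = len(html)
    return {
        "uncompressed_bytes": size,
        "within_limit": size <= GOOGLEBOT_LIMIT_BYTES,
        # Bytes that fall after the cut-off and would not be considered.
        "bytes_past_limit": max(0, size - GOOGLEBOT_LIMIT_BYTES),
    }
```

Passing the bytes of a saved HTML file to `check_html_size` returns its uncompressed size and how many bytes, if any, fall after the cut-off.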

As the document states, images and videos are fetched separately. Going by the document, Googlebot’s 15 MB cut-off applies to the HTML file only.

However, the limit may still trouble parts of the community. Very large pages could find it difficult to stay within it. Staying within it means fitting all the main information into the first 15 MB of a single page, which may disturb the flow of the content.

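Lastly, the point to remember concerns images and videos encoded directly in HTML files, for example as data URIs. In that case they are part of the HTML file, so Googlebot counts them toward the 15 MB limit, while referenced resources are fetched separately. The updated document makes this clear, and the SEO community should keep it in mind for the future.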
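When images or videos are embedded directly in the HTML, for instance as base64 data URIs, their bytes are part of the HTML file itself. The sketch below estimates how many bytes of a page are taken up by such inline data. The helper `inline_data_bytes` and the pattern `DATA_URI_PATTERN` are hypothetical names, and the pattern only catches data URIs inside quoted attribute values, so unquoted uses such as CSS `url(data:...)` are not counted.

```python
import re

# Hypothetical pattern: a data: URI inside a quoted attribute value.
DATA_URI_PATTERN = re.compile(r"""["'](data:[^"']+)["']""")


def inline_data_bytes(html: str) -> int:
    """Sum the UTF-8 byte length of every quoted data: URI in the HTML."""
    return sum(
        len(match.group(1).encode("utf-8"))
        for match in DATA_URI_PATTERN.finditer(html)
    )
```

A large result suggests that moving the embedded media into separately referenced files would shrink the HTML file noticeably.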
